Frank Costa – Manager, System Integration Services

Bob Sloma – Digital Transformation SME

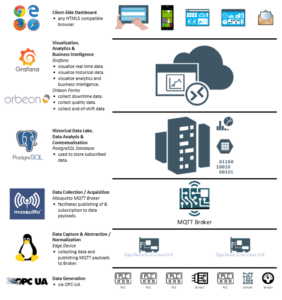

The rise of the Industrial Internet of Things (IIoT) has revolutionized the manufacturing industry, bringing about a new era of smart factories and intelligent automation. The integration of machines, sensors, and other devices through a network of connected systems has generated vast amounts of data, enabling manufacturers to gain unprecedented insights into their operations and make data-driven decisions. With IIoT, manufacturers can achieve optimized production, reduced downtime, improved quality control, and enhanced safety in the workplace, making it a game-changer for the industry. Within this context, we present an open-source technology stack that Sandalwood has developed to help small and medium-sized manufacturers experiment with IIoT. The stack can be enhanced and built upon over time with paid, out-of-the-box solutions.

Data Generation

Starting at the lowest level, data is generated by various control systems and/or sensors. This raw data is collected via edge devices. The OPC-UA protocol, which supports subscription-based (publish-subscribe) data delivery, is used to publish data from source devices.

Data Capture & Abstraction/Normalization

Edge devices (which in our case are Linux-based) are used to “abstract” or normalize the incoming data to present a uniform and structured flow of data. The abstraction process can also occur at the next level up, but it is always better to push data abstraction to as low a level as possible.

At this level, an OPC-UA to MQTT connector, written in Python, is used to subscribe to the device OPC-UA server, process and normalize the published data messages to a common JSON payload format, and publish them to a Mosquitto MQTT broker (which acts as a single data collection point). The OPC-UA to MQTT connector configuration requires:

- The OPC-UA server’s IP address and port.

- The nodes (tags) from which to collect the data.

- The MQTT broker hostname/IP address and port.

- A unique MQTT client ID.

- The MQTT payload header data key/value pair format to define the message type and device location.
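As a rough illustration of how these settings might fit together, the sketch below groups them in a small Python structure and wraps one collected value in a JSON payload. The field names, the payload layout, the node ID, and all addresses are assumptions made for this example; they are not the connector's actual configuration format.

```python
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConnectorConfig:
    """Hypothetical container for the settings listed above."""
    opcua_endpoint: str   # OPC-UA server IP address and port
    node_ids: tuple       # nodes (tags) from which to collect data
    mqtt_host: str        # MQTT broker hostname or IP address
    mqtt_port: int        # MQTT broker port
    mqtt_client_id: str   # must be unique per connector
    header: dict = field(default_factory=dict)  # message type, device location


def normalize(config, node_id, value, timestamp=None):
    """Wrap one collected value in a common JSON payload (layout is assumed)."""
    ts = timestamp or datetime.now(timezone.utc)
    payload = {
        "header": dict(config.header),
        "node": node_id,
        "value": value,
        "timestamp": ts.isoformat(),
    }
    return json.dumps(payload, sort_keys=True)


# Placeholder values only; replace with the real server, tags, and broker.
config = ConnectorConfig(
    opcua_endpoint="opc.tcp://192.0.2.10:4840",
    node_ids=("ns=2;s=Line1.Oven.Temperature",),
    mqtt_host="broker.example.local",
    mqtt_port=1883,
    mqtt_client_id="opcua-connector-line1",
    header={"message_type": "telemetry", "location": "plant1/line1"},
)

message = normalize(
    config,
    config.node_ids[0],
    181.5,
    datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc),
)
print(message)
```

Keeping the header key/value pairs in the configuration rather than in code means the same connector can be deployed on another line by changing only its settings.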

The connector can use a JSON schema file to validate the data structure before sending the payload. Devices that publish data directly to the broker must have their telemetry data abstracted and normalized by another service, which subscribes to a raw data topic on the broker and republishes the result to another topic that follows the standard data structure.
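The validation step can be pictured with the minimal sketch below. It hand-rolls a check for required keys and basic types so that it runs with the standard library alone; a real deployment would more likely load the schema file and use a full JSON Schema validator such as the `jsonschema` package. The schema contents and key names are assumptions for illustration.

```python
# Hypothetical schema using a small subset of JSON Schema:
# required keys and a primitive type per key.
PAYLOAD_SCHEMA = {
    "required": ["header", "node", "value", "timestamp"],
    "properties": {
        "header": {"type": "object"},
        "node": {"type": "string"},
        "value": {"type": "number"},
        "timestamp": {"type": "string"},
    },
}

_PYTHON_TYPES = {"object": dict, "string": str, "number": (int, float)}


def validation_errors(payload, schema=PAYLOAD_SCHEMA):
    """Return a list of problems; an empty list means the payload conforms."""
    errors = [
        f"missing key: {key}"
        for key in schema["required"]
        if key not in payload
    ]
    for key, rule in schema["properties"].items():
        if key not in payload:
            continue
        value = payload[key]
        matches = isinstance(value, _PYTHON_TYPES[rule["type"]])
        # bool is a subclass of int in Python, so exclude it from "number".
        if rule["type"] == "number" and isinstance(value, bool):
            matches = False
        if not matches:
            errors.append(f"{key}: expected {rule['type']}")
    return errors


good = {
    "header": {"message_type": "telemetry", "location": "plant1/line1"},
    "node": "ns=2;s=Line1.Oven.Temperature",
    "value": 181.5,
    "timestamp": "2024-01-01T12:00:00+00:00",
}
bad = {"node": "ns=2;s=Line1.Oven.Temperature", "value": "hot"}

print(validation_errors(good))
print(validation_errors(bad))
```

Returning a list of errors instead of raising on the first problem makes it easier to log exactly why a non-conforming message was dropped.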

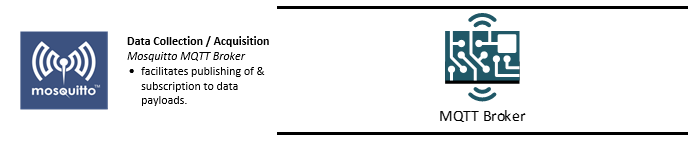

Data Collection/Acquisition

This level is used to consolidate all incoming data streams into one single point of contact. As previously mentioned, data abstraction/normalization can also take place at this level. This is the natural break point between on-prem and cloud-based applications; in cloud-based applications, data would be pushed from this level to the appropriate cloud connectors.

As noted in the previous section, this level also supports real-time data exchange, because:

- It is the last stage before historical data ingestion.

- Data at this point should already be available in a common abstracted (or normalized) form.

Located between the raw data collection and ingestion layers, the data acquisition layer can be used to validate the structure and content of the data, as well as to filter out non-conforming data streams based on JSON schemas, although it is preferable to do this at the abstraction/normalization level.

In our case, the Mosquitto MQTT broker lets agents on the PostgreSQL server subscribe to the published data topics and write the incoming messages to the open-source database. At the visualization level, Grafana can subscribe to the same topics through the broker for real-time data visualization.
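To make the hand-off to the database more concrete, here is a minimal sketch of what a subscribing agent might do with each message: decode the JSON payload and turn it into parameters for a parameterized insert. The table name, column names, and payload layout are hypothetical, and the MQTT subscription and database connection are deliberately left out.

```python
import json

# Hypothetical table and columns; the %s placeholders follow the
# parameter style used by the psycopg PostgreSQL driver.
INSERT_SQL = (
    "INSERT INTO telemetry (recorded_at, location, node, value) "
    "VALUES (%s, %s, %s, %s)"
)


def to_row(message):
    """Convert one normalized MQTT message into parameters for INSERT_SQL."""
    payload = json.loads(message)
    return (
        payload["timestamp"],
        payload["header"]["location"],
        payload["node"],
        float(payload["value"]),
    )


# Example message in the assumed normalized format.
example = json.dumps({
    "header": {"message_type": "telemetry", "location": "plant1/line1"},
    "node": "ns=2;s=Line1.Oven.Temperature",
    "value": 181.5,
    "timestamp": "2024-01-01T12:00:00+00:00",
})

row = to_row(example)
print(INSERT_SQL)
print(row)
```

Because every message already follows one structure by the time it reaches the broker, the agent needs only a single mapping like this rather than one per device type.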

In our next post, we will review the last three layers of the IIOT4Free technology stack and their benefits to a manufacturer.

Why Sandalwood?

We are a one-stop-shop for launching job rotation for any employer from conception to implementation. Our experts tailor our services to meet the needs of our customers by collaborating with them throughout the entire process. We do not offer cookie cutter solutions for job rotation because the needs of employers vary significantly.

Sandalwood is pleased to offer solutions above and beyond traditional ergonomic assessments. With our in-depth knowledge of various digital human modelling software suites, integrating these tools into your health and safety programs has never been easier. Sandalwood is experienced in ergonomic program design and is an industry leader in digital human modelling services. We have a diverse team that is able to leverage the results from the digital human model to provide in-depth risk assessments of future designs and the current state. Sandalwood can also pair these assessments with expertise and provide guidance on the best solution for you. Sandalwood is also at the forefront of emerging technologies and is able to integrate motion capture, wearables, and extended or virtual reality into your ergonomic program.
